- History of ANN

- ANN: Definition

- Advantages of Neural Networks

- Basic Models of ANN

- Important Terminologies of ANNs

Artificial Neural Network

History of Artificial Neural Networks (ANNs)

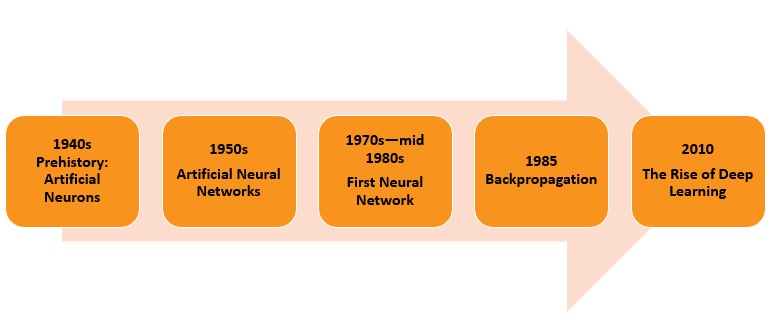

ANNs have a history of more than 80 years, beginning in the 1940s. The milestones of ANNs are as follows:

- Artificial Neurons (1940s): The first artificial neurons were proposed in 1943 by Walter Pitts and Warren McCulloch, two scientists who studied logic and neurobiology, to demonstrate how simple structures could imitate logical operations.

- Artificial Neural Networks (1950s): In 1957, Frank Rosenblatt, a research psychologist at the Cornell Aeronautical Laboratory, devised the Perceptron, which consisted of a single layer of neurons. It built on the ideas in the influential paper by Warren McCulloch and Walter Pitts. The Perceptron's primary function was to classify images with a small number of pixels, typically a few hundred. It can be considered the antecedent of modern neural networks.

- First Neural Network Winter (1970 – mid 1980s): The 1969 book Perceptrons: An Introduction to Computational Geometry, by MIT professors Marvin Minsky and Seymour Papert, demonstrated the Perceptron's limitations. As a result, the first "neural network winter" set in and lasted until the mid-1980s.

- Backpropagation (1985 – mid 2000s): The earlier networks were single-layer perceptrons and could not perform complex tasks. A technique called backpropagation, short for backward propagation of errors, was developed to train multi-layer networks: the error measured at the output nodes is propagated backward through the network toward the input nodes, and the weights are adjusted to reduce it. It is a crucial mathematical tool for improving the accuracy of predictions in data mining and machine learning.

- Deep Learning (2010 onwards): The 2010s saw a significant surge in deep learning. In the early 2010s, several studies advocated a particular activation function for neurons, the Rectified Linear Unit (ReLU) (see Nair, V. and Hinton, G. E. (2010)). Improved optimization algorithms, such as Adam, made it possible to train deeper neural networks efficiently. The revolution began in 2012 at the ImageNet Large Scale Visual Recognition Challenge, a prominent image classification competition. A team led by Alex Krizhevsky from the University of Toronto achieved an error rate of 15.3%, which was unprecedented at the time. In comparison, the second-best model had a significantly higher error rate of 26.2%. Krizhevsky's team used convolutional neural networks, a distinct form of neural network.
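
To make two of the milestones above more concrete, here is a minimal Python sketch of a threshold neuron in the spirit of the 1943 artificial neuron, together with the ReLU activation mentioned for the 2010s. The function names (`threshold_neuron`, `logical_and`, `logical_or`, `relu`) and the particular weights and thresholds are assumptions chosen for illustration; they are not taken from the original papers.

```python
def threshold_neuron(inputs, weights, threshold):
    """Return 1 when the weighted sum of binary inputs reaches the threshold, else 0."""
    total = sum(w * x for w, x in zip(weights, inputs))
    return 1 if total >= threshold else 0


def logical_and(a, b):
    # Illustrative choice: both inputs must be active to reach a threshold of 2.
    return threshold_neuron((a, b), (1, 1), threshold=2)


def logical_or(a, b):
    # Illustrative choice: a single active input already reaches a threshold of 1.
    return threshold_neuron((a, b), (1, 1), threshold=1)


def relu(x):
    """Rectified Linear Unit: keep positive values, clamp negative values to zero."""
    return max(0.0, x)


if __name__ == "__main__":
    # Print the truth tables computed by the two threshold neurons.
    for a in (0, 1):
        for b in (0, 1):
            print(a, b, "AND:", logical_and(a, b), "OR:", logical_or(a, b))
```

No single choice of weights and threshold in `threshold_neuron` reproduces XOR, which is the kind of single-layer limitation associated with the first neural network winter.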
