- Introduction to Deep Learning

- Convolutional Neural Network

- Architecture & Transfer Learning

- VGGNet

- GoogLeNet

- ResNet

- Inception Net

- R-CNN

- YOLO

Deep Learning Algorithms

Definition of Deep Learning

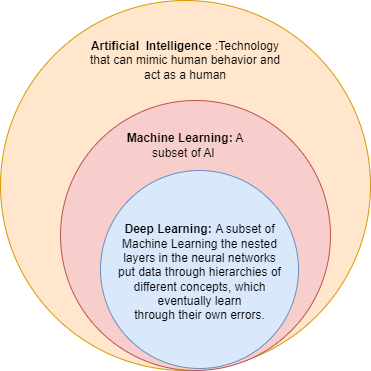

Deep learning is a subset of machine learning in which models are built from many stacked layers of processing units. Each layer transforms the output of the layer before it, so successive layers build increasingly abstract representations of the input data.

Deep learning models are built from layers of artificial neurons connected into "neural networks." Input data flows through a series of interconnected layers, each performing a specific computation (typically a weighted sum of its inputs followed by a nonlinear activation function). The network learns from examples by adjusting the strengths of the connections (weights) between neurons to improve its performance.
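
To make the layer-by-layer computation concrete, here is a minimal sketch in plain Python (no framework assumed) of a forward pass: each neuron computes a weighted sum of its inputs plus a bias and applies an activation function, and each layer's outputs become the next layer's inputs. The 2-3-1 layer sizes, the weight values, and the helper names `dense_layer` and `forward` are hypothetical choices for illustration; the weights are hand-picked, not learned.

```python
import math


def relu(z):
    return max(0.0, z)


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def dense_layer(inputs, weights, biases, activation):
    """One fully connected layer: activation(weighted sum of inputs + bias) per neuron.

    weights[j][i] is the connection weight from input i to neuron j.
    """
    outputs = []
    for neuron_weights, bias in zip(weights, biases):
        z = sum(w * x for w, x in zip(neuron_weights, inputs)) + bias
        outputs.append(activation(z))
    return outputs


def forward(x, layers):
    """Feed x through each (weights, biases, activation) layer in turn."""
    for weights, biases, activation in layers:
        x = dense_layer(x, weights, biases, activation)
    return x


# Hypothetical 2-3-1 network with hand-picked (untrained) weights.
network = [
    ([[0.5, -0.2], [0.1, 0.4], [-0.3, 0.8]], [0.0, 0.1, -0.1], relu),  # hidden layer
    ([[1.0, -1.0, 0.5]], [0.2], sigmoid),                               # output layer
]

print(forward([1.0, 2.0], network))  # one output, squashed into (0, 1) by the sigmoid
```

Frameworks such as PyTorch or TensorFlow express the same arithmetic as matrix operations over whole batches, but the per-neuron computation is the same.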

The evolution of deep learning has been marked by significant advancements and milestones. Here is a brief overview of the key developments in the field:

- Artificial Neural Networks (ANNs): The foundation of deep learning can be traced back to the 1940s and 1950s when the concept of artificial neural networks was first introduced. These early neural networks were simple and consisted of a few interconnected artificial neurons.

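- Perceptron (1957): The perceptron, developed by Frank Rosenblatt, was one of the earliest and most influential neural network models. It was a single-layer neural network for binary classification, and as a single-layer model it can only learn problems whose classes are linearly separable. Its weights were trained with the perceptron learning rule, which adjusts them only when a training example is misclassified; this rule is distinct from the closely related delta (Widrow-Hoff) rule, which minimizes squared error.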

- Backpropagation (1974): The backpropagation algorithm was first described by Paul Werbos in 1974 but gained wide attention only after David Rumelhart, Geoffrey Hinton, and Ronald Williams independently rediscovered and popularized it in 1986. Backpropagation applies the chain rule layer by layer, from the output back toward the input, to compute the gradient of the error with respect to every weight; an optimizer such as gradient descent then uses these gradients to adjust the weights iteratively. This made training multi-layer neural networks practical.

- Convolutional Neural Networks (CNNs) (1980s): CNNs were introduced by Yann LeCun and colleagues in the late 1980s. They are well suited to image processing because they exploit the spatial structure of data: small learned filters slide across the input, so the same feature detector is reused at every location. CNNs went on to achieve breakthrough results in image classification and object recognition.

- Recurrent Neural Networks (RNNs) (1980s): RNNs, developed in the 1980s, process sequential data by maintaining a hidden state that carries information from earlier steps of the sequence. This makes them suitable for tasks such as speech recognition, language modeling, and machine translation. However, RNNs struggle to learn long-term dependencies because gradients tend to vanish or explode as they are propagated back through many time steps, which led to more advanced architectures such as Long Short-Term Memory (LSTM, 1997) and Gated Recurrent Units (GRUs, 2014).

- Deep Belief Networks (DBNs) (2006): In 2006, Geoffrey Hinton and his colleagues introduced deep belief networks, which combined unsupervised learning (stacked restricted Boltzmann machines trained one layer at a time) with supervised fine-tuning. DBNs were capable of learning hierarchical representations of data and paved the way for the resurgence of deep learning.

- Deep Learning (2000s): Deep learning focuses on training neural networks with many hidden layers. Depth allows these networks to learn hierarchical representations of data, with each layer building on the features found by the one before it, which enables strong performance on a wide range of tasks.

- Big Data and Computational Power: The availability of vast amounts of labeled data, along with advances in parallel computing and graphics processing units (GPUs), contributed significantly to the success of deep learning. Deep learning models thrive on large-scale datasets, which enable them to learn complex patterns and generalize well.
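
The perceptron entry above can be made concrete with a short sketch in plain Python of a single threshold unit trained with the perceptron learning rule, `w <- w + lr * (target - prediction) * x`. The function names, the AND/XOR toy data, and the defaults `learning_rate=1.0` and `epochs=20` are illustrative assumptions. Starting from zero weights, the rule makes the same sequence of decisions for any positive learning rate, so 1.0 simply keeps the arithmetic exact.

```python
def predict(weights, bias, x):
    """Threshold unit: output 1 if w.x + b > 0, else 0."""
    activation = sum(w * xi for w, xi in zip(weights, x)) + bias
    return 1 if activation > 0 else 0


def train_perceptron(samples, labels, learning_rate=1.0, epochs=20):
    """Perceptron learning rule: w <- w + lr * (target - prediction) * x.

    The error (target - prediction) is 0, +1 or -1, so weights change only
    when a sample is misclassified.
    """
    weights = [0.0] * len(samples[0])
    bias = 0.0
    for _ in range(epochs):
        mistakes = 0
        for x, target in zip(samples, labels):
            error = target - predict(weights, bias, x)
            if error != 0:
                mistakes += 1
                weights = [w + learning_rate * error * xi for w, xi in zip(weights, x)]
                bias += learning_rate * error
        if mistakes == 0:  # every sample classified correctly: converged
            break
    return weights, bias


X = [(0, 0), (0, 1), (1, 0), (1, 1)]

# Logical AND is linearly separable, so the rule converges.
w, b = train_perceptron(X, [0, 0, 0, 1])
print([predict(w, b, x) for x in X])  # [0, 0, 0, 1]

# XOR is not linearly separable: no single threshold unit gets all four right.
w_xor, b_xor = train_perceptron(X, [0, 1, 1, 0])
print([predict(w_xor, b_xor, x) for x in X] == [0, 1, 1, 0])  # False
```

The XOR case shows the limitation of a single layer; adding a hidden layer, trained with backpropagation, removes it.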
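
For the backpropagation entry, the sketch below computes gradients for a tiny 2-2-1 sigmoid network with squared-error loss by applying the chain rule from the output layer back to the hidden layer. It then checks the hidden-layer gradients against central finite differences and takes one gradient-descent step. The network size, the parameter values, and the helper names (`backprop`, `numerical_grad_W1`, `sgd_step`) are hypothetical; real frameworks compute these gradients automatically with automatic differentiation.

```python
import copy
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(params, x):
    """2-input, 2-hidden, 1-output network with sigmoid activations."""
    W1, b1, W2, b2 = params
    h = [sigmoid(W1[j][0] * x[0] + W1[j][1] * x[1] + b1[j]) for j in range(2)]
    y = sigmoid(W2[0] * h[0] + W2[1] * h[1] + b2)
    return h, y


def loss(params, x, target):
    """Squared error 0.5 * (y - target)^2."""
    _, y = forward(params, x)
    return 0.5 * (y - target) ** 2


def backprop(params, x, target):
    """Gradients of the loss for every parameter, via the chain rule."""
    W1, b1, W2, b2 = params
    h, y = forward(params, x)
    # Output layer: dL/dz_out = (y - target) * sigmoid'(z_out), with sigmoid' = y * (1 - y).
    delta_out = (y - target) * y * (1 - y)
    dW2 = [delta_out * h[j] for j in range(2)]
    db2 = delta_out
    # Hidden layer: send delta_out back through W2, then through each hidden sigmoid.
    delta_h = [delta_out * W2[j] * h[j] * (1 - h[j]) for j in range(2)]
    dW1 = [[delta_h[j] * x[i] for i in range(2)] for j in range(2)]
    db1 = delta_h
    return dW1, db1, dW2, db2


def numerical_grad_W1(params, x, target, j, i, eps=1e-6):
    """Central finite-difference estimate of dL/dW1[j][i], used as a check."""
    plus, minus = copy.deepcopy(params), copy.deepcopy(params)
    plus[0][j][i] += eps
    minus[0][j][i] -= eps
    return (loss(plus, x, target) - loss(minus, x, target)) / (2 * eps)


def sgd_step(params, grads, lr):
    """One gradient-descent update: parameter <- parameter - lr * gradient."""
    W1, b1, W2, b2 = params
    dW1, db1, dW2, db2 = grads
    return (
        [[W1[j][i] - lr * dW1[j][i] for i in range(2)] for j in range(2)],
        [b1[j] - lr * db1[j] for j in range(2)],
        [W2[j] - lr * dW2[j] for j in range(2)],
        b2 - lr * db2,
    )


# Hypothetical parameters (W1, b1, W2, b2) and a single training example.
params = ([[0.15, 0.20], [0.25, 0.30]], [0.35, 0.35], [0.40, 0.45], 0.60)
x, target = [0.05, 0.10], 0.01

grads = backprop(params, x, target)
print(grads[0][0][1], numerical_grad_W1(params, x, target, 0, 1))  # should agree closely
print(loss(params, x, target), loss(sgd_step(params, grads, lr=0.5), x, target))
```

Backpropagation itself only produces the gradients; `sgd_step` is the separate optimizer step that uses them.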
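
The convolution at the heart of a CNN layer, as described in the CNN entry above, can be sketched in plain Python: one small kernel slides over the image with stride 1 and no padding ("valid" mode), and the same weights are applied at every position. Like most deep learning libraries, it computes cross-correlation (the kernel is not flipped). The 4x4 image and the hand-made vertical-edge kernel are hypothetical; in a trained CNN the kernel weights are learned.

```python
def conv2d(image, kernel):
    """'Valid' 2D convolution (cross-correlation) with stride 1.

    The same kernel weights are reused at every position, which is how a
    CNN shares parameters across the spatial layout of an image.
    """
    kh, kw = len(kernel), len(kernel[0])
    out_h = len(image) - kh + 1
    out_w = len(image[0]) - kw + 1
    output = []
    for r in range(out_h):
        row = []
        for c in range(out_w):
            total = 0
            for i in range(kh):
                for j in range(kw):
                    total += image[r + i][c + j] * kernel[i][j]
            row.append(total)
        output.append(row)
    return output


# 4x4 image: dark left half (0), bright right half (1).
image = [
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
    [0, 0, 1, 1],
]
# Hypothetical vertical-edge kernel: responds where brightness increases left to right.
kernel = [
    [-1, 1],
    [-1, 1],
]
print(conv2d(image, kernel))  # [[0, 2, 0], [0, 2, 0], [0, 2, 0]]
```

The output is large only in the column where the dark-to-bright edge sits, regardless of the row: the detector finds the feature wherever it appears.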
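
The hidden state mentioned in the RNN entry above can be illustrated with a scalar Elman-style recurrence, `h_t = tanh(w_x * x_t + w_h * h_{t-1} + b)`, sketched below in plain Python. The weight values and the function name `rnn_forward` are illustrative assumptions; real RNNs use weight matrices learned with backpropagation through time.

```python
import math


def rnn_forward(inputs, w_x, w_h, b, h0=0.0):
    """Scalar Elman-style RNN: h_t = tanh(w_x * x_t + w_h * h_{t-1} + b).

    The hidden state h is the network's memory of earlier inputs.
    """
    h = h0
    states = []
    for x in inputs:
        h = math.tanh(w_x * x + w_h * h + b)
        states.append(h)
    return states


# Hypothetical weights, shared across all time steps.
w_x, w_h, b = 1.0, 0.5, 0.0

a = rnn_forward([1.0, 0.0, 0.0], w_x, w_h, b)
c = rnn_forward([0.0, 0.0, 0.0], w_x, w_h, b)
print(a[-1], c[-1])  # same last input, different final state: the first input is remembered

long_seq = rnn_forward([1.0] + [0.0] * 20, w_x, w_h, b)
print(long_seq[-1])  # the first input's trace has almost vanished after 20 steps
```

With `w_h = 0.5`, the derivative of each state with respect to the previous one, `w_h * (1 - h_t**2)`, is at most 0.5, so the first input's influence (and any gradient flowing back to it) shrinks geometrically. This is a simplified picture of why plain RNNs have trouble with long-term dependencies, the problem LSTMs and GRUs were designed to address.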