- Introduction to Deep Learning

- Convolutional Neural Network

- Architecture & Transfer Learning

- VGGNet

- GoogLeNet

- ResNet

- Inception Net

- R-CNN

- YOLO

Deep Learning Algorithms

Definition of Deep Learning

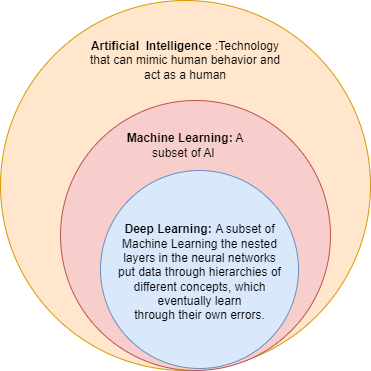

Deep learning is a subset of machine learning whose algorithms are built and function much like those in traditional machine learning, but are stacked in numerous layers, each providing a different interpretation of the data it receives.

Deep learning models are artificial neural networks, composed of multiple layers of artificial neurons. These networks process and transform input data through a series of interconnected layers, each performing specific computations. The networks learn from examples by adjusting the connections (weights) between neurons to improve their performance.
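As a minimal sketch of these ideas, the pure-Python code below stacks fully connected layers (weighted sums followed by a ReLU non-linearity) and learns from examples by nudging every weight and bias in the direction that reduces the error. The helper names (`dense`, `forward`, `init_layers`, `train_step`), the layer sizes, and the toy target `y = x1 * x2` are illustrative assumptions. The gradient is estimated by finite differences for readability, not by the backpropagation that real deep learning frameworks use.

```python
import random

# Hypothetical helpers for illustration only; not any library's API.

def relu(v):
    # Non-linearity applied by hidden neurons.
    return max(0.0, v)

def dense(inputs, weights, biases):
    # One fully connected layer: each output neuron computes a weighted
    # sum of every input plus its own bias.
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]

def forward(x, layers):
    # Feed the input through the layers in order: ReLU after hidden
    # layers, no activation after the final (output) layer.
    a = list(x)
    for i, (weights, biases) in enumerate(layers):
        z = dense(a, weights, biases)
        a = z if i == len(layers) - 1 else [relu(v) for v in z]
    return a

def init_layers(sizes, seed=0):
    # sizes = [n_inputs, hidden..., n_outputs]; small random weights, zero biases.
    rng = random.Random(seed)
    return [([[rng.uniform(-0.5, 0.5) for _ in range(n_in)] for _ in range(n_out)],
             [0.0] * n_out)
            for n_in, n_out in zip(sizes[:-1], sizes[1:])]

def mse(layers, data):
    # Mean squared error of the single output over all (x, y) examples.
    return sum((forward(x, layers)[0] - y) ** 2 for x, y in data) / len(data)

def train_step(layers, data, lr=0.05, eps=1e-5):
    # Estimate d(loss)/d(parameter) by finite differences (slow but simple;
    # real frameworks use backpropagation), then move every weight and
    # bias a small step against its gradient.
    base = mse(layers, data)
    grads = []
    for weights, biases in layers:
        for row in weights + [biases]:
            for j in range(len(row)):
                old = row[j]
                row[j] = old + eps
                grads.append((row, j, (mse(layers, data) - base) / eps))
                row[j] = old
    for row, j, g in grads:
        row[j] -= lr * g

# Toy non-linear target: y = x1 * x2 on a 3x3 grid of points.
data = [((a, b), a * b) for a in (-1.0, 0.0, 1.0) for b in (-1.0, 0.0, 1.0)]
layers = init_layers([2, 4, 1])  # 2 inputs -> 4 hidden neurons -> 1 output
before = mse(layers, data)
for _ in range(200):
    train_step(layers, data)
after = mse(layers, data)
print(f"loss before: {before:.3f}, after: {after:.3f}")
```

Making the network "deeper" in this sketch is just a matter of passing more hidden sizes to `init_layers`, for example `[2, 8, 8, 8, 1]`.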

Deep learning helps us make sense of, and extract knowledge from, complex, high-dimensional, non-linear, and very large datasets. Its models do this by placing many hidden layers between the input and output layers, which gives them a very complex architecture.

Difference between Machine Learning and Deep Learning

Machine learning and deep learning are both subfields of artificial intelligence (AI) that involve training models to make predictions or perform tasks. However, they differ in their approach, complexity, and the types of problems they are best suited for. Here are the key differences between machine learning and deep learning:

- Data Requirements: Machine learning models can perform well with relatively smaller datasets, especially when the features are well-defined and selected appropriately. Deep learning models typically require large amounts of labeled training data to achieve high performance. The availability of big data has been crucial to the success of deep learning, as it allows the models to learn complex patterns and generalize effectively.

- Architecture and Model Complexity: Machine learning models typically consist of algorithms that learn from and make predictions or decisions based on patterns in data. These models require explicit feature engineering, where domain experts manually select and engineer relevant features from the input data. Deep learning models, specifically deep neural networks, are composed of multiple layers of interconnected artificial neurons. These networks can automatically learn and extract hierarchical representations or features from raw data, eliminating the need for explicit feature engineering. The complexity and depth of deep neural networks allow them to capture intricate patterns in large-scale datasets.

- Feature Engineering: In machine learning, feature engineering plays a crucial role. Domain experts manually design and select relevant features from the input data, which are then used as inputs to the learning algorithms. This process requires expertise and domain knowledge. Deep learning models can automatically learn and extract features from raw data. The networks learn hierarchical representations of the data by themselves, without the need for explicit feature engineering. This characteristic is particularly advantageous when dealing with high-dimensional and unstructured data, such as images, audio, and text.

- Performance on Complex Tasks: Machine learning models can perform well on a wide range of tasks, including classification, regression, clustering, and recommendation systems. They are often effective when the relationships between input features and output predictions are relatively simple. Deep learning models excel in tasks that involve complex patterns and dependencies, especially in domains such as computer vision, natural language processing, and speech recognition. Deep learning's ability to learn hierarchical representations enables it to capture intricate details and abstract features from data.

- Computational Resources: Machine learning models tend to be computationally less demanding compared to deep learning models. They can often be trained and deployed on standard hardware or less powerful devices. Deep learning models, especially large-scale architectures, require substantial computational resources, including high-performance GPUs or specialized hardware. Training deep neural networks can be computationally intensive and time-consuming.
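To make the feature-engineering and compute contrasts above concrete, the sketch below counts trainable parameters for two hypothetical models: a linear (softmax) classifier over 10 hand-engineered features, and a multilayer perceptron that takes raw 28×28 grayscale pixels directly. The feature count, image size, class count, and hidden-layer widths are assumptions chosen for illustration, and `dense_params` is a hypothetical helper.

```python
def dense_params(layer_sizes):
    # Hypothetical helper: a fully connected layer from n_in to n_out units
    # has n_in * n_out weights plus n_out biases.
    return sum(n_in * n_out + n_out
               for n_in, n_out in zip(layer_sizes[:-1], layer_sizes[1:]))

# Classical ML: a linear classifier over 10 hand-engineered features, 10 classes.
linear_on_features = dense_params([10, 10])

# Deep learning: an MLP over raw 28x28 pixels with two hidden layers, 10 classes.
mlp_on_pixels = dense_params([28 * 28, 512, 256, 10])

print(linear_on_features)  # 110
print(mlp_on_pixels)       # 535818
```

Each weight is used in roughly one multiply-add per example in the forward pass, so training and inference cost grow with the parameter count. This is one reason deep models, which skip manual feature engineering by learning from raw inputs, typically need GPUs or other specialized hardware.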